What happens when a test fails? If someone is manually running the test, then they will pause and poke around to learn more about the problem. However, when an automated test fails, the rest of the suite keeps running. Testers won’t get to view results until the suite is complete, and the automation won’t perform any extra exploration at the time of failure. Instead, testers must review logs and other artifacts gathered during testing, and they might even need to rerun the failed test to check if the failure is consistent.

Since testers typically rerun failed tests as part of their investigation, why not configure automated tests to automatically rerun failed tests? On the surface, this seems logical: automated retries can eliminate one more manual step. Unfortunately, automated retries can also enable poor practices, like ignoring legitimate issues.

So, are automated test retries good or bad? This is actually a rather controversial topic. I’ve heard many voices strongly condemn automated retries as an antipattern (see here, here, and here). While I agree that automated retries can be abused, I still believe they can add value to test automation. The question deserves a nuanced answer.

So, how do automated retries work?

To avoid any confusion, let’s carefully define what we mean by “automated test retries.”

Let’s say I have a suite of 100 automated tests. When I run these tests, the framework will execute each test individually and yield a pass or fail result for the test. At the end of the suite, the framework will aggregate all the results together into one report. In the best case, all tests pass: 100/100.

However, suppose that one of the tests fails. Upon failure, the test framework would capture any exceptions, perform any cleanup routines, log a failure, and safely move on to the next test case. At the end of the suite, the report would show 99/100 passing tests with one test failure.

By default, most test frameworks will run each test one time. However, some test frameworks have features for automatically rerunning test cases that fail. The framework may even enable testers to specify how many retries to attempt. So, let’s say that we configure 2 retries for our suite of 100 tests. When that one test fails, the framework would queue that failing test to run twice more before moving on to the next test. It would also add more information to the test report. For example, if one retry passed but another one failed, the report would show 99/100 passing tests with a 1/3 pass rate for the failing test.
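To make the mechanics concrete, here is a minimal sketch of test-level retries in Python. It is not how any particular framework implements them: the names `CaseResult`, `run_once`, `run_suite`, and `make_flaky` are hypothetical, and real frameworks also handle setup, cleanup, and logging around each attempt. The point to notice is that every attempt is recorded rather than overwritten.

```python
from dataclasses import dataclass, field
from typing import Callable, Dict, Iterable, List


@dataclass
class CaseResult:
    """All attempts for one test case (hypothetical report record)."""
    name: str
    attempts: List[bool] = field(default_factory=list)  # True = pass, in run order

    @property
    def pass_rate(self) -> str:
        return f"{sum(self.attempts)}/{len(self.attempts)}"


def run_once(test: Callable[[], None]) -> bool:
    """Run a single attempt; any exception counts as a failure."""
    try:
        test()
        return True
    except Exception:
        return False


def run_suite(tests: Dict[str, Callable[[], None]], retries: int = 2) -> List[CaseResult]:
    """Run each test once, then rerun failing tests `retries` more times."""
    results = []
    for name, test in tests.items():
        result = CaseResult(name, [run_once(test)])
        if not result.attempts[0]:
            # Retry before moving on to the next test, keeping every outcome.
            for _ in range(retries):
                result.attempts.append(run_once(test))
        results.append(result)
    return results


def make_flaky(outcomes: Iterable[bool]) -> Callable[[], None]:
    """Build a fake test that passes or fails according to a scripted sequence."""
    script = iter(outcomes)

    def test() -> None:
        if not next(script):
            raise AssertionError("simulated failure")

    return test


if __name__ == "__main__":
    suite = {"stable": lambda: None, "flaky": make_flaky([False, True, False])}
    for result in run_suite(suite, retries=2):
        print(result.name, result.attempts, result.pass_rate)
```

In this sketch, the scripted flaky test fails, passes, and fails again, so its record carries a 1/3 pass rate, which mirrors the report described above.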

In this article, we will focus on automated retries for test cases. Testers could also program other types of retries into automated tests, such as retrying browser page loads or REST requests. Interaction-level retries require sophisticated, context-specific logic, whereas test-level retry logic works the same for any kind of test case. (Interaction-level retries would also need their own article.)

Automated retries can be a terrible antipattern

Let’s see how automated test retries can be abused:

Jeremy is a member of a team that runs a suite of 300 automated tests for their web app every night. Unfortunately, the tests are notoriously flaky. About a dozen different tests fail every night, and Jeremy spends a lot of time each morning triaging the failures. Whenever he reruns failed tests individually on his laptop, they almost always pass.

To save himself time in the morning, Jeremy decides to add automatic retries to the test suite. Whenever a test fails, the framework will attempt one retry. Jeremy will only investigate tests whose retries failed. If a test had a passing retry, then he will presume that the original failure was just a flaky test.

Ouch! There are several problems here.

First, Jeremy is using retries to conceal information rather than reveal information. If a test fails but its retries pass, then the test still reveals a problem! In this case, the underlying problem is flaky behavior. Jeremy is using automated retries to overwrite intermittent failures with intermittent passes. Instead, he should investigate why the tests are flaky. Perhaps automated interactions have race conditions that need more careful waiting. Or, perhaps features in the web app itself are behaving unexpectedly. Test failures indicate a problem – either in test code, product code, or infrastructure.

Second, Jeremy is using automated retries to perpetuate poor practices. Before adding automated retries to the test suite, Jeremy was already manually retrying tests and disregarding flaky failures. Adding retries to the test suite merely speeds up the process, making it easier to sidestep failures.

Third, the way Jeremy uses automated retries indicates that the team does not value their automated test suite very much. Good test automation requires effort and investment. Persistent flakiness is a sign of neglect, and it fosters low trust in testing. Using retries is merely a “band-aid” on both the test failures and the team’s attitude about test automation.

In this example, automated test retries are indeed a terrible antipattern. They enable Jeremy and his team to ignore legitimate issues. In fact, they incentivize the team to ignore failures because they institutionalize the practice of replacing red X’s with green checkmarks. This team should scrap automated test retries and address the root causes of flakiness.

Automated retries are not the main problem

Ignoring flaky failures is unfortunately all too common in the software industry. I must admit that in my days as a newbie engineer, I was guilty of rerunning tests to get them to pass. Why do people do this? The answer is simple: intermittent failures are difficult to resolve.

Testers love to find consistent, reproducible failures because those are easy to explain. Other developers can’t push back against hard evidence. However, intermittent failures take much more time to isolate. Root causes can become mind-bending puzzles. They might be triggered by environmental factors or awkward timings. Sometimes, teams never figure out what causes them. In my personal experience, bug tickets for intermittent failures get far less traction than bug tickets for consistent failures. All these factors incentivize folks to turn a blind eye to intermittent failures when convenient.

Automated retries are just a tool and a technique. They may enable bad practices, but they aren’t inherently bad. The main problem is willfully ignoring certain test results.

Automated retries can be incredibly helpful

So, what is the right way to use automated test retries? Use them to gather more information from the tests. Test results are simply artifacts of feedback. They reveal how a software product behaved under specific conditions and stimuli. The pass-or-fail nature of assertions simplifies test results at the top level of a report in order to draw attention to failures. However, reports can give more information than just binary pass-or-fail results. Automated test retries yield a series of results for a failing test that indicate a success rate.

For example, SpecFlow and the SpecFlow+ Runner make it easy to use automatic retries the right way. Testers simply need to add the retryFor setting to their SpecFlow+ Runner profile to choose which tests to retry, along with retryCount to set the number of retries to attempt. In the final report, SpecFlow records the success rate of each test with color-coded counts. Results are revealed, not concealed.

This information jumpstarts analysis. As a tester, one of the first questions I ask myself about a failing test is, “Is the failure reproducible?” Without automated retries, I need to manually rerun the test to find out – often at a much later time and potentially within a different context. With automated retries, that step happens automatically and in the same context. Analysis then takes two branches:

- If all retry attempts failed, then the failure is probably consistent and reproducible. I would expect it to be a clear functional failure that would be fast and easy to report. I jump on these first to get them out of the way.

- If some retry attempts passed, then the failure is intermittent, and it will probably take more time to investigate. I will look more closely at the logs and screenshots to determine what went wrong. I will try to exercise the product behavior manually to see if the product itself is inconsistent. I will also review the automation code to make sure there are no unhandled race conditions. I might even need to rerun the test multiple times to measure a more accurate failure rate.
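The two branches above can be sketched as a small classifier over a test's recorded attempts. This is only an illustration: the `Outcome` enum and the `classify` function are hypothetical names, and the input is assumed to be a list of booleans with the first run followed by its retries.

```python
from enum import Enum
from typing import Sequence


class Outcome(Enum):
    PASSED = "passed"
    INTERMITTENT = "intermittent failure"
    CONSISTENT = "consistent failure"


def classify(attempts: Sequence[bool]) -> Outcome:
    """Classify one test from its attempts (True = pass), first run first."""
    if not attempts:
        raise ValueError("at least one attempt is required")
    if attempts[0]:
        return Outcome.PASSED  # passed on the first run, so no retries happened
    if any(attempts[1:]):
        return Outcome.INTERMITTENT  # failed at first, but some retry passed
    # Every attempt failed. With zero retries there is no evidence either way;
    # this sketch still reports the failure as consistent.
    return Outcome.CONSISTENT


if __name__ == "__main__":
    for attempts in ([True], [False, True, False], [False, False, False]):
        print(attempts, "->", classify(attempts).value)
```

Note that an intermittent result is still a failure to investigate. The classification only tells me which kind of investigation to expect.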

I do not ignore any failures. Instead, I use automated retries to gather more information about the nature of the failures. In the moment, this extra info helps me expedite triage. Over time, the trends this info reveals help me identify weak spots in both the product under test and the test automation.

Automated retries are most helpful at high scale

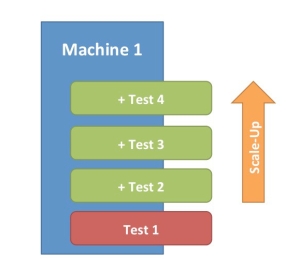

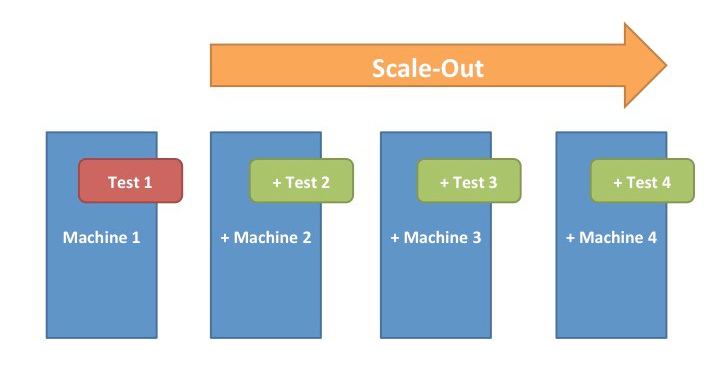

When used appropriately, automated retries can be helpful for any size test automation project. However, they are arguably more helpful for large projects running tests at high scale than small projects. Why? Two main reasons: complexities and priorities.

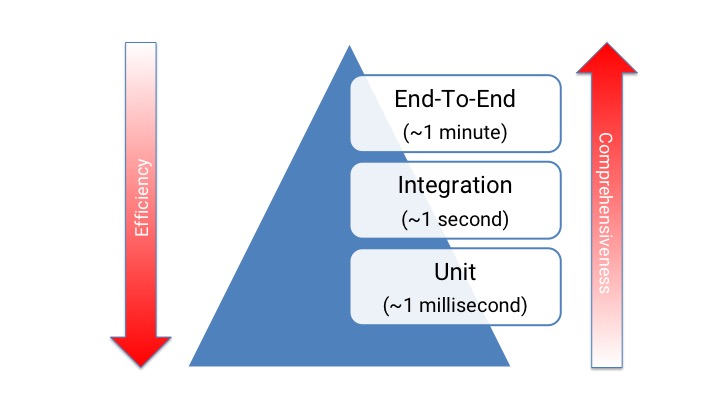

Large-scale test projects have many moving parts. For example, at PrecisionLender, we presently run 4K-10K end-to-end tests against our web app every business day. (We also run ~100K unit tests every business day.) Our tests launch from TeamCity as part of our Continuous Integration system, and they use in-house Selenium Grid instances to run 50-100 tests in parallel. The PrecisionLender application itself is enormous, too.

Intermittent failures are inevitable in large-scale projects for many different reasons. There could be problems in the test code, but those aren’t the only possible problems. At PrecisionLender, Boa Constrictor already protects us from race conditions, so our intermittent test failures are rarely due to problems in automation code. Other causes for flakiness include:

- The app’s complexity makes certain features behave inconsistently or unexpectedly

- Extra load on the app slows down response times

- The cloud hosting platform has a service blip

- Selenium Grid arbitrarily chokes on a browser session

- The DevOps team recycles some resources

- An engineer makes a system change while tests are running

- The CI pipeline deploys a new change in the middle of testing

Many of these problems result from infrastructure and process. They can’t easily be fixed, especially when environments are shared. As one tester, I can’t rewrite my whole company’s CI pipeline to be “better.” I can’t rearchitect the app’s whole delivery model to avoid all collisions. I can’t perfectly guarantee 100% uptime for my cloud resources or my test tools like Selenium Grid. Some of these might be good initiatives to pursue, but one tester’s dictates do not immediately become reality. Many times, we need to work with what we have. Curt demands to “just fix the tests” come off as pedantic.

Automated test retries provide very useful information for discerning the nature of such intermittent failures. For example, at PrecisionLender, we hit Selenium Grid problems frequently. Roughly 1/10000 Selenium Grid browser sessions will inexplicably freeze during testing. We don’t know why this happens, and our investigations have been unfruitful. We chalk it up to minor instability at scale. Whenever the 1/10000 failure strikes, our suite’s automated retries kick in and pass. When we review the test report, we see the intermittent failure along with its exception method. Based on its signature, we immediately know that the test is fine. We don’t need to do extra investigation work or manual reruns. Automated retries gave us the info we needed.
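A quick back-of-the-envelope calculation shows why a 1/10000 freeze rate matters at this scale. The sketch below rests on simplifying assumptions that are mine, not measurements: each test uses exactly one browser session, freezes are independent of each other, and the rate is exactly 1/10000. The function names are hypothetical.

```python
P_FREEZE = 1 / 10_000  # assumed per-session freeze probability


def expected_freezes(sessions: int) -> float:
    """Average number of frozen sessions in a run of `sessions` sessions."""
    return sessions * P_FREEZE


def p_at_least_one_freeze(sessions: int) -> float:
    """Chance that at least one session freezes, assuming independence."""
    return 1 - (1 - P_FREEZE) ** sessions


def p_attempt_and_retry_freeze() -> float:
    """Chance that a test's first attempt and one retry both freeze."""
    return P_FREEZE ** 2


if __name__ == "__main__":
    for sessions in (4_000, 10_000):
        print(
            f"{sessions} sessions: "
            f"{expected_freezes(sessions):.1f} expected freezes, "
            f"{p_at_least_one_freeze(sessions):.0%} chance of at least one"
        )
    print(f"first attempt and retry both freeze: {p_attempt_and_retry_freeze():.0e}")
```

Under these assumptions, a day of 4K-10K tests has roughly a 33% to 63% chance of hitting at least one freeze, while the chance that the same test freezes on both its first attempt and a retry is about one in a hundred million. That is why a passing retry is such a strong signal for this particular failure signature.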

(Image source: LambdaTest.)

Another type of common failure is intermittently slow performance in the PrecisionLender application. Occasionally, the app will freeze for a minute or two and then recover. When that happens, we see a “brick wall” of failures in our report: all tests during that time frame fail. Then, automated retries kick in, and the tests pass once the app recovers. Automatic retries prove in the moment that the app momentarily froze but that the individual behaviors covered by the tests are okay. This indicates functional correctness for the behaviors amidst a performance failure in the app. Our team has used these kinds of results on multiple occasions to identify performance bugs in the app by cross-checking system logs and database queries during the time intervals for those brick walls of intermittent failures. Again, automated retries gave us extra information that helped us find deep issues.
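One way to spot these brick walls programmatically is to cluster intermittent failures by the time of their first failed attempt. The sketch below is a hypothetical illustration, not our actual tooling: `IntermittentFailure`, `find_brick_walls`, and the `max_gap` and `min_size` thresholds are all assumptions that would need tuning against real reports.

```python
from dataclasses import dataclass
from datetime import datetime, timedelta
from typing import Iterable, List, Tuple


@dataclass
class IntermittentFailure:
    test_name: str
    failed_at: datetime  # time of the first failed attempt (a retry passed later)


Wall = Tuple[datetime, datetime, int]  # (start, end, number of failures)


def find_brick_walls(
    failures: Iterable[IntermittentFailure],
    max_gap: timedelta = timedelta(seconds=30),
    min_size: int = 5,
) -> List[Wall]:
    """Group failures that occur close together in time into candidate walls."""
    walls: List[Wall] = []
    cluster: List[IntermittentFailure] = []

    def flush() -> None:
        # Only keep clusters big enough to suggest a system-wide event.
        if len(cluster) >= min_size:
            walls.append((cluster[0].failed_at, cluster[-1].failed_at, len(cluster)))

    for failure in sorted(failures, key=lambda f: f.failed_at):
        if cluster and failure.failed_at - cluster[-1].failed_at > max_gap:
            flush()
            cluster = []
        cluster.append(failure)
    flush()
    return walls


if __name__ == "__main__":
    start = datetime(2024, 1, 1, 2, 0, 0)
    sample = [IntermittentFailure(f"test_{i}", start + timedelta(seconds=5 * i)) for i in range(6)]
    sample.append(IntermittentFailure("loner", start + timedelta(minutes=10)))
    for wall_start, wall_end, count in find_brick_walls(sample):
        print(f"{count} failures between {wall_start} and {wall_end}")
```

The start and end times of each wall give the interval to cross-check against system logs and database queries.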

Automated retries delineate failure priorities

That answers complexity, but what about priority? Unfortunately, in large projects, there is more work to do than any team can handle. Teams need to make tough decisions about what to do now, what to do later, and what to skip. That’s just business. Testing decisions become part of that prioritization.

In almost all cases, consistent failures are inherently a higher priority than intermittent failures because they have a greater impact on the end users. If a feature fails every single time it is attempted, then the user is blocked from using the feature, and they cannot receive any value from it. However, if a feature works some of the time, then the user can still get some value out of it. Furthermore, the rarer the intermittency, the lower the impact, and consequently the lower the priority. Intermittent failures are still important to address, but they must be prioritized relative to other work at hand.

Automated test retries automate that initial prioritization. When I triage PrecisionLender tests, I look into consistent “red” failures first. Our SpecFlow reports make them very obvious. I know those failures will be straightforward to reproduce, explain, and hopefully resolve. Then, I look into intermittent “orange” failures second. Those take more time. I can quickly identify issues like Selenium Grid disconnections, but other issues may not be obvious (like system interruptions) or may need additional context (like the performance freezes). Sometimes, we may need to let tests run for a few days to get more data. If I get called away to another more urgent task while I’m triaging results, then at least I could finish the consistent failures. It’s a classic 80/20 rule: investigating consistent failures typically gives more return for less work, while investigating intermittent failures gives less return for more work. It is what it is.

The only time I would prioritize an intermittent failure over a consistent failure would be if the intermittent failure causes catastrophic or irreversible damage, like wiping out an entire system, corrupting data, or burning money. However, that type of disastrous failure is very rare. In my experience, almost all intermittent failures are due to poorly written test code, automation timeouts from poor app performance, or infrastructure blips.
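This prioritization can be expressed as a sort order. The following is a sketch under assumptions, not a description of our SpecFlow reports: the `Failure` record, its `catastrophic` flag (which a person would set during triage), and `triage_order` are hypothetical names.

```python
from dataclasses import dataclass
from typing import Iterable, List


@dataclass
class Failure:
    test_name: str
    attempts: List[bool]        # first run plus retries, True = pass
    catastrophic: bool = False  # hypothetical flag set by a human during triage

    @property
    def failure_rate(self) -> float:
        return self.attempts.count(False) / len(self.attempts)


def triage_order(failures: Iterable[Failure]) -> List[Failure]:
    """Catastrophic failures first, then the highest failure rates first."""
    return sorted(
        failures,
        # False sorts before True, so `not catastrophic` puts flagged items on top.
        # Consistent failures have a rate of 1.0 and so precede intermittent ones.
        key=lambda f: (not f.catastrophic, -f.failure_rate, f.test_name),
    )


if __name__ == "__main__":
    queue = triage_order([
        Failure("rare_blip", [False, True, True]),
        Failure("always_broken", [False, False, False]),
        Failure("often_broken", [False, True, False]),
        Failure("data_wipe", [False, True, True], catastrophic=True),
    ])
    print([f.test_name for f in queue])
```

Sorting by failure rate also captures the idea that rarer intermittency means lower priority, while the flag covers the rare intermittent failure that does serious damage.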

Context matters

Automated test retries can be a blessing or a curse. It all depends on how testers use them. If testers use retries to reveal more information about failures, then retries greatly assist triage. Otherwise, if testers use retries to conceal intermittent failures, then they aren’t doing their jobs as testers. Folks should not be quick to presume that automated retries are always an antipattern. We couldn’t achieve our scale of testing at PrecisionLender without them. Context matters.