This case study was written by Andrew Knight, Lead Software Engineer in Test for Q2’s PrecisionLender product, in collaboration with Q2 and Tricentis. It explains the PrecisionLender team’s continuous testing journey and how SpecFlow served as a cornerstone for success.

What is PrecisionLender?

PrecisionLender is a web application that empowers commercial bankers with in-the-moment insights that help them structure and price commercial deals. Andi®, PrecisionLender’s intelligent virtual analyst, delivers these hyper-focused recommendations in real-time, allowing relationship managers to make data-driven decisions while pricing their commercial deals. PrecisionLender is owned and developed by Q2, a financial experience software company dedicated to providing digital banking and lending solutions to banks, credit unions, alternative finance, and fintech companies in the U.S. and internationally.

(Picture taken from the PrecisionLender Support Center)

The starting point

The PrecisionLender team had a robust Continuous Integration (CI) delivery pipeline with strong unit test coverage, but they lacked end-to-end feature coverage. Developers would fill this gap by manually inspecting their changes in a shared development environment. However, as the PrecisionLender app grew, manual checks could not cover all possible integrations. The team knew they needed continuous automated testing to provide a safety net for development to remain lean and efficient. In April 2018, they hired Andrew Knight as their first Software Engineer in Test (SET) – a new role for the company – to lead the effort.

Automating tests with SpecFlow

The PrecisionLender team developed the Boa test solution – a project for automating end-to-end tests at scale. Boa would become PrecisionLender’s internal platform for test automation development. The name “Boa” is a loose acronym for “Behavior-Oriented Automation.”

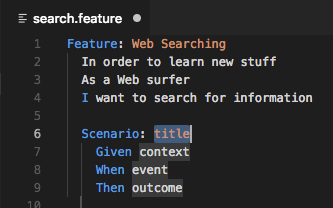

The team chose SpecFlow to be the core framework for Boa tests. Since the PrecisionLender app’s backend is developed using .NET, SpecFlow was a natural fit. SpecFlow’s Gherkin syntax made tests readable and understandable, even to product owners and product support specialists who do not code.

The SpecFlow framework integrates with tools like Selenium WebDriver for testing Web UIs and RestSharp for testing REST APIs to exercise vital pathways for thorough app coverage. SpecFlow’s dependency injection mechanisms are solid yet simple, and the online docs are thorough. Plus, SpecFlow is an open-source project, so anyone can look at its code to learn how things work, open requests for new features, and even offer code contributions.

Executing tests with SpecFlow+ Runner

Writing good tests was only part of the challenge. The PrecisionLender team needed to execute Boa tests continuously to provide fast feedback on changes to the app. The team chose to run Boa tests using SpecFlow+ Runner, which is tailored for SpecFlow tests. The team uses SpecFlow+ Runner to launch tests in parallel in TeamCity any time a developer deploys a code change to internal pre-production environments. The entire test suite also runs every night against multiple product configurations. SpecFlow+ Runner produces a helpful test report with everything needed to triage test failures: pass-and-fail tallies overall and per feature, a visual execution timeline, and full system logs. If engineers need to investigate certain failures more closely, they can use SpecFlow tags and SpecFlow+ Runner profiles to selectively filter tests for reruns. SpecFlow+ Runner’s multiple features help the team expedite test execution and investigation.

Sharing features with SpecFlow+ LivingDoc

Good test cases are more than just verification procedures – they are behavior specifications. They define how features should work. Instead of keeping testing work siloed by role, the PrecisionLender team wanted to share Boa tests as behavior specs with all stakeholders to foster greater collaboration and understanding around features. The team also wanted to share Boa tests with specific customers without sharing the entire automation code.

SpecFlow+ LivingDoc enabled the PrecisionLender team to turn Gherkin feature files into living documentation. Whereas the SpecFlow+ Runner report focuses on automation execution, the SpecFlow+ LivingDoc report focuses on behavior specification apart from coding and automation details. LivingDoc displays Gherkin scenarios in a readable, searchable way that both internal folks and customers can consume. It can also optionally include high-level pass-and-fail results for each scenario, providing just enough information to be helpful and not overwhelming. LivingDoc has also helped PrecisionLender’s engineers identify and eliminate unused step definitions within the automation code. PrecisionLender benefits greatly from complementary reports from SpecFlow+ Runner and SpecFlow+ LivingDoc.

Improving interactions with Boa Constrictor

The Boa test solution initially used the Page Object Model to model interactions with the PrecisionLender app. However, as the PrecisionLender team automated more and more Boa tests, it became apparent that page objects did not scale well. Many page object classes had duplicative methods, making automation code messy. Some methods also did not include appropriate waiting mechanisms, introducing flaky failures.

PrecisionLender’s SETs developed Boa Constrictor, a .NET implementation of the Screenplay Pattern, to make better interactions for better automation. In Screenplay, actors use abilities to perform interactions. For example, an ability could be using Selenium WebDriver, and an interaction could be clicking an element. The Screenplay Pattern can be seen as a refactoring of the Page Object Model that minimizes duplicate code through a better separation of concerns. Individual interactions can be hardened for robustness, eliminating flaky hotspots. The Boa test solution now exclusively uses Boa Constrictor for interactions.
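To make the actor, ability, and interaction vocabulary concrete, here is a minimal sketch of the Screenplay Pattern. It is written in Python for brevity and is not Boa Constrictor's API, which is a .NET library. Every name in it (`FakeBrowser`, `BrowseTheWeb`, `Click`, `Actor`) is hypothetical, and the browser is a stand-in that only records clicks rather than driving Selenium WebDriver.

```python
from dataclasses import dataclass
from typing import Any, Dict, List


class FakeBrowser:
    """Hypothetical stand-in for a WebDriver session; it only records clicks."""

    def __init__(self) -> None:
        self.clicked: List[str] = []

    def click(self, locator: str) -> None:
        self.clicked.append(locator)


@dataclass
class BrowseTheWeb:
    """Ability: holds the tool an actor needs to interact with a web page."""
    browser: FakeBrowser


class Actor:
    """Actor: owns abilities and performs interactions with them."""

    def __init__(self, name: str) -> None:
        self.name = name
        self._abilities: Dict[type, Any] = {}

    def can(self, ability: Any) -> "Actor":
        # Register the ability under its type so interactions can look it up.
        self._abilities[type(ability)] = ability
        return self

    def using(self, ability_type: type) -> Any:
        return self._abilities[ability_type]

    def attempts_to(self, interaction: Any) -> Any:
        return interaction.perform_as(self)


@dataclass
class Click:
    """Interaction: one small, reusable action defined in exactly one place."""
    locator: str

    def perform_as(self, actor: Actor) -> None:
        # A real implementation would wait for the element here, so every
        # test that clicks gets the same hardened behavior.
        actor.using(BrowseTheWeb).browser.click(self.locator)


browser = FakeBrowser()
andy = Actor("Andy").can(BrowseTheWeb(browser))
andy.attempts_to(Click("#login-button"))
```

Because the click logic lives in a single `Click` class instead of being repeated across page object methods, a waiting fix made there would apply to every test that uses it.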

In October 2020, Q2 released Boa Constrictor as an open-source project so that anyone can use it. It is fully compatible with SpecFlow and other .NET test frameworks, and it provides rich interactions for Selenium WebDriver and RestSharp out of the box.

Scaling massively with Selenium Grid

When the PrecisionLender team first started automating Boa tests, they ran tests one at a time. That soon became too slow since the average Boa test took 20 to 50 seconds to complete. The team then started running up to 3 tests in parallel on one machine, but that also was not fast enough. They turned to Selenium Grid, a tool for running WebDriver sessions remotely across multiple machines.

PrecisionLender built a set of internal Selenium Grid instances using Microsoft Azure virtual machines to run Boa tests at high scale. As of July 2021, PrecisionLender has over 1800 unique Boa tests that run across four distinct product configurations. Whenever TeamCity detects a code change, it triggers a “continuous” Boa test suite of over 1000 tests, which runs 50 tests in parallel using Google Chrome on Selenium Grid and completes in about 10 minutes. TeamCity launches the full test suite every night against all product configurations with 64 to 100 tests in parallel on Selenium Grid. Continuous Integration currently runs up to 10,000 Boa tests daily against the PrecisionLender app with SpecFlow+ Runner and Selenium Grid.
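A back-of-the-envelope calculation shows why this degree of parallelism matters. The sketch below is an idealized estimate, not a measurement. It assumes the 20 to 50 second average test duration mentioned earlier still applies, that tests are spread evenly across parallel slots, and that grid startup and scheduling overhead is zero. The function name is hypothetical.

```python
def ideal_wall_clock_minutes(test_count: int, avg_seconds: float, parallel: int) -> float:
    """Idealized suite duration: total test time divided evenly across slots."""
    return test_count * avg_seconds / parallel / 60


# Continuous suite: about 1000 tests on 50 parallel slots.
fast = ideal_wall_clock_minutes(1000, 20, 50)   # every test at the low end
slow = ideal_wall_clock_minutes(1000, 50, 50)   # every test at the high end
print(f"50 parallel: {fast:.1f} to {slow:.1f} minutes")

# The same suite run one test at a time.
serial_fast = ideal_wall_clock_minutes(1000, 20, 1)
serial_slow = ideal_wall_clock_minutes(1000, 50, 1)
print(f"serial: {serial_fast / 60:.1f} to {serial_slow / 60:.1f} hours")
```

Under these assumptions the estimate is roughly 7 to 17 minutes with 50 parallel tests, which is in line with the reported 10 minutes, versus roughly 6 to 14 hours when tests run serially.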

Shifting left with BDD

Better testing and automation practices eventually inspired better development practices. Previously, product owners would create user stories, but developers would struggle to understand requirements and business purposes fully. PrecisionLender’s SETs started bringing together the Three Amigos – business, development, and testing roles – to discuss product behaviors proactively while creating user stories. They introduced Behavior-Driven Development (BDD) activities like Example Mapping to explore behaviors together. Then, well-defined stories could be easily connected to SpecFlow tests written in Gherkin following Specification by Example (SBE). Teams repeatedly saved time by thinking before coding and specifying before testing. They built higher quality into features from the beginning, and they stopped themselves before starting work on half-baked stories with unjustified value propositions. Developers who participated in these behavior-driven practices were also more likely to automate Boa tests on their own. Furthermore, one of PrecisionLender’s developers loved BDD practices so much that he joined the team of SETs! Through Gherkin, SpecFlow provided a foundation that enabled quality work to shift left.

Challenges along the way

Achieving true continuous testing had its challenges along the way. Intermittent failure was the most significant issue PrecisionLender faced at scale. With so many tests, environments, and infrastructural pieces, arbitrary failures were statistically unavoidable. The PrecisionLender team took a two-pronged approach to handle intermittent failures: (1) eliminate race conditions in automation using good interactions with Boa Constrictor, and (2) use SpecFlow+ Runner to automatically retry failed tests to determine if failures were consistent or intermittent. These two approaches reduced the frequency of flaky failures and helped engineers quickly resolve any remaining issues. As a result, Boa tests enjoy a success rate well above 99%, and most failures are due to actual bugs.
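The second prong, retrying a failed test to tell consistent failures from intermittent ones, can be sketched as follows. This illustrates the idea only. It is not how SpecFlow+ Runner implements or configures retries, and the function name, labels, and default retry count are all assumptions.

```python
from typing import Callable


def classify(run_test: Callable[[], bool], max_retries: int = 2) -> str:
    """Run a test and retry on failure to label the outcome.

    run_test returns True when the test passes and False when it fails.
    """
    if run_test():
        return "passed"
    for _ in range(max_retries):
        if run_test():
            # Failed at least once, then passed: likely a flaky failure.
            return "intermittent"
    # Failed on the first run and on every retry: likely a real bug.
    return "consistent"


# Simulated test that fails once and then passes on the first retry.
outcomes = iter([False, True])
print(classify(lambda: next(outcomes)))
```

A failure labeled consistent is worth investigating as a product bug first, while one labeled intermittent points toward a race condition, an environment problem, or a performance issue.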

PrecisionLender app performance at scale was a second big challenge. Running up to 100 tests in parallel turned functional tests into de facto load tests. Testing at scale repeatedly uncovered performance bottlenecks in the app. Performance issues caused widespread test failures that were difficult to diagnose because they appeared intermittently. However, the visual timeline and timestamps in the SpecFlow+ Runner report helped the team identify periods of failure that could be crosschecked against backend logs, metrics, and database queries. Developers resolved many performance issues and significantly improved the app’s response times and load capacity.

Training team members to develop solid test automation was the third challenge. At the start of the journey, test automation, Gherkin, and BDD were all new to PrecisionLender. The PrecisionLender SETs took active steps to train others on how to develop good tests and good automation through group workshops, Three Amigos meetings, and one-on-one mentoring sessions. They shared resources like the Automation Panda blog for how to write good tests and good Gherkin. The investment in education paid off: many developers have joined the SETs in writing readable, reliable Boa tests that run continuously.

Benefits to the business

Developing a continuous testing solution brought many incredible benefits to PrecisionLender. First, the quality of the PrecisionLender app improved because continuous testing provided fast feedback on failures that developers could quickly fix. Instead of relying on manual spot checks, the team could trust the comprehensive safety net of Boa tests to catch bugs. Many issues would be caught within an hour of a developer making a code commit, and the longest feedback cycle would be only one business day for the full nightly test suites to run. Boa tests catch failures before customers ever experience them. The continuous nature of testing enables PrecisionLender to publish new releases every two weeks.

Second, the high reliability of the Boa test solution means that the PrecisionLender team can trust test results. When a test passes, the behavior is working. When a test fails, there is a real bug. Reliability also means that engineers spend less time on automation maintenance and more time on more valuable activities, like developing new features and adding new tests. Quality is present in both the product code and the test code.

Third, continuous testing boosts customer confidence in PrecisionLender. Customers trust the software quality because they know that PrecisionLender thoroughly tests every release. The PrecisionLender team also shares SpecFlow+ LivingDoc reports with specific clients to prove quality.

A bright future

PrecisionLender’s continuous testing journey is not over. Since the PrecisionLender team hired its first SET, it has hired three more, in addition to a testing manager, to grow quality improvement efforts. Multiple development teams have written their own Boa tests, and they plan to write more tests independently. SpecFlow’s tools have been indispensable in helping the PrecisionLender team achieve successful quality assurance. As PrecisionLender welcomes more customers, the Boa solution will be ready to scale with more tests, more configurations, and more executions.