Many of you know me as the “Automation Panda” through my blog, my Twitter handle, or my online courses. Maybe you’ve attended one of my conference talks. I love connecting with others, but many people don’t get to know me personally. Behind my black-and-white façade, I’m a regular guy. When I’m not programming, I enjoy cooking and video gaming. I’m also currently fixing up a vintage Volkswagen Beetle. However, for nearly two years, I’ve suffered a skin rash that will not go away. I haven’t talked about it much until recently, when it became unbearable.

For a while, things turned bad. Thankfully, things are a little better now. I’d like to share my journey publicly because it helps to humanize folks in tech like me. We’re all people, not machines. Vulnerability is healthy. My journey also reminded me of a few important tenets for testing software. I hope you’ll read my story, and I promise to avoid the gross parts.

Distant Precursors

I’ve been blessed with great health and healthcare my entire life. However, when I was a teenager, I had a weird skin issue: the skin around my right eye socket turned dry, itchy, and red. Imagine dandruff, but on your face – it was flaky like a bad test (ha!). Lotions and creams did nothing to help. After it persisted for several weeks, my parents scheduled an appointment with my pediatrician. They didn’t know what caused it, but they gave me a sample tube of topical steroids to try. The steroids worked great. The skin around my eye cleared up and stayed normal. This issue resurfaced again while I was in college, but it went away on its own after a month or two.

Rise of the Beast

Around October 2019, I noticed this same rash started appearing again around my right eye for the first time in a decade. The exact date is fuzzy in my mind, but I remember it started before TestBash San Francisco 2019. At that time, my response was to ignore it. I’d continue to keep up my regular hygiene (like washing my face), and eventually it would go away like last time.

Unfortunately, the rash got worse. It started spreading to my cheek and my forehead. I started using body lotion on it, but it would burn whenever I’d apply it, and the rash would persist. My wife started trying a bunch of fancy, high-dollar lotions (like Kiehl’s and other brands I didn’t know), but they didn’t help at all. By Spring 2020, my hands and forearms started breaking out with dry, itchy red spots, too. These were worse: if I scratched them, they would bleed. I also remember taking my morning shower one day and thinking to myself, “Gosh, my whole body is super itchy!”

I had had enough, so I visited a local dermatologist. He took one look at my face and arms and prescribed topical steroids. He told me to use them for about four weeks and then stop for two weeks before a reevaluation. The steroids partially worked. The itching would subside, but the rash wouldn’t go away completely. When I stopped using the steroids, the rash returned to the same places. I also noticed the rash slowly spreading. Eventually, it crept up my upper arms to my neck and shoulders, down my back and torso, and all the way down to my legs.

On a second attempt, the dermatologist prescribed a much stronger topical steroid in addition to a round of oral steroids. My skin healed much better with the stronger medicine, but, inevitably, when I stopped using it, the rash returned. By now, patches of the rash could be found all over my body, and they itched like crazy. I couldn’t wear white shirts anymore because spots would break out and bleed, as if I had nicked myself while shaving. I don’t remember the precise timing, but I also asked the dermatologist to remove a series of three moles on my body that had become badly infected by the rash.

Fruitless Mitigations

As a good tester, I wanted to know the root cause for my rash. Was I allergic to something? Did I have a deeper medical issue? Steroids merely addressed the symptoms, and they did a mediocre job at best. So, I tried investigating what triggered my rash.

When the dry patch first appeared above my eye, I suspected cold, dry weather. “Maybe the crisp winter air is drying my skin too much.” That’s when I tried using an assortment of creams. When the rash started spreading, that’s when I knew the cause was more than winter weather.

Then, my mind turned to allergies. I knew I was allergic to pet dander, but I had never had reactions to anything else before. At the same time the rash started spreading from my eye, I noticed I had a small problem with mangoes. Whenever I would bite into a fresh mango, my lips would become severely, painfully chapped for the next few days. I learned from reading articles online that some folks who are allergic to poison ivy (like me) are also sensitive to mangoes because both plants contain urushiol in their skin and sap. At that time, my family and I were consuming a boxful of mangoes. I immediately cut out mangoes and hoped for the best. No luck.

When the rash spread to my whole body, I became serious about finding the root cause. Every effect has a cause, and I wanted to investigate all potential causes in order of likelihood. I had already crossed dry weather and mangoes off the list. Since the rash appeared in splotches across my whole body, I reasoned its trigger could be either external – something coming in contact with all parts of my skin – or internal – something not right from the inside pushing out.

What comes in contact with the whole body? Air, water, and cloth. Skin reactions to things in air and water seemed unlikely, so I focused on clothing and linens. That’s when I remembered a vague story from childhood. When my parents taught me how to do laundry, they told me they used a scent-free, hypoallergenic detergent because my dad had a severe skin reaction to regular detergent one time long before I was born. Once I confirmed the story with my parents, I immediately sprang into action. I switched over my detergent and fabric softener. I rewashed all my clothes and linens – all of them. I even thoroughly cleaned out my dryer duct to make sure no chemicals could leach back into the machine. (Boy, that was a heaping pile of dust.) Despite these changes, my rash persisted. I also changed my soaps and shampoos to no avail.

At the same time, I looked internally. I read in a few online articles that skin rashes could be caused by deficiencies. I started taking a daily multivitamin. I also tried supplements for calcium, Vitamin B6, Vitamin D, and collagen. Although I’m sure my body was healthier as a result, none of these supplements made a noticeable difference.

My dermatologist even did a skin punch test. He cut a piece of skin out of my back about 3mm wide through all layers of the skin. The result of the biopsy was “atopic dermatitis.” Not helpful.

For relief, I tried an assortment of creams from Eucerin, CeraVe, Aveeno, and O’Keeffe’s. None of them staved off the persistent itching or reduced the redness. They were practically useless. The only cream that had any impact (other than steroids) was an herbal Chinese medicine. With a cooling burn, it actually stopped the itch and visibly reduced the redness. By Spring 2021, I stopped going to the dermatologist and simply relied on the Chinese cream. I didn’t have a root cause, but I had an inexpensive mitigation that was 差不多 (chà bù duō; “almost even” or “good enough”).

Insufferability

Up until Summer 2021, my rash was mostly an uncomfortable inconvenience. Thankfully, since everything shut down for the COVID pandemic, I didn’t need to make public appearances with unsightly skin. The itchiness was the worst symptom, but nobody would see me scratch at home.

Then, around the end of June 2021, the rash got worse. My whole face turned red, and my splotches became itchier than ever. Worst of all, the Chinese cream no longer had much effect. Timing was lousy, too, since my wife and I were going to spend most of July in Seattle. I needed medical help ASAP. I needed to see either my primary care physician or an allergist. I called both offices to schedule appointments. The wait time for my primary doctor was 1.5 months, while the wait time for an allergist was 3 months! Even if I hadn’t been going to Seattle, I couldn’t have seen a doctor any sooner.

My rash plateaued while in Seattle. It was not great, but it didn’t stop me from enjoying our visit. I was okay during a quick stop in Salt Lake City, too. However, as soon as I returned home to the Triangle, the rash erupted. It became utterly unbearable – to the point where I couldn’t sleep at night. I was exhausted. My skin was raw. I could not focus on any deep work. I hit the point of thorough debilitation.

When I visited my doctor on August 3, she performed a series of blood tests. Those confirmed what the problem was not:

- My metabolic panel was okay.

- My cholesterol was okay.

- My thyroid was okay.

- I did not have celiac disease (gluten intolerance).

- I did not have hepatitis C.

Nevertheless, these results did not indicate any culprit. My doctor then referred me to an allergist for further testing on August 19.

The two weeks between appointments were hell. I was not allowed to take steroids or antihistamines for one week before the allergy test. My doctor also prescribed me hydroxyzine under the presumption that the root cause was an allergy. Unfortunately, I did not react well to hydroxyzine. It did nothing to relieve the rash or the itching. Even though I took it at night, I would feel off the next day, to the point where I literally could not think critically while trying to do my work. It affected me so badly that I accidentally ran a red light. During the two weeks between appointments, I averaged about 4 hours of sleep per night. I had to take sick days off work, and on days I could work, I had erratic hours. (Thankfully, my team, manager, and company graciously accommodated my needs.) I had no relief. The creams did nothing. I even put myself on an elimination diet in a desperate attempt to avoid potential allergens.

If you saw this tweet, now you know the full story behind it:

A Possible Answer

On August 19, 2021, the allergist finally performed a skin prick allergy test. A skin prick test is one of the fastest, easiest ways to reveal common allergies. The nurse drew dots down both of my forearms. She then lightly scratched my skin next to each dot with a plastic nub that had been dunked in an allergen. After waiting 15 minutes, she measured the diameter of each spot to determine the severity of the allergic reaction, if any. She must have tested about 60 different allergens.

The results yielded immediate answers:

- I did not have any of the “Big 8” food allergies.

- I am allergic to cats and dogs, which I knew.

- I am allergic to certain pollens, which I suspected.

- I am allergic to certain fungi, which is no surprise.

Then, there was the major revelation: I am allergic to dust mites.

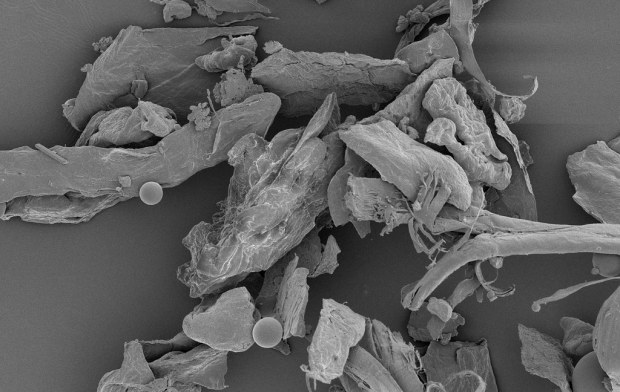

Once I did a little bit of research, this made lots of sense. Dust mites are microscopic bugs that live in plush environments (like mattresses and pillows) and eat dead skin cells. The allergy is not to the mite itself but rather to its waste. They can appear anywhere but are typically most prevalent in bedrooms. My itchiness always seemed strongest at night while in bed. The worst areas on my skin were my face and upper torso, which have the most contact with my bed pillows and covers. Since I sleep in bed every night, the allergic reaction would be recurring and ongoing. No wonder I couldn’t get any relief!

I don’t yet know if eliminating dust mites will completely cure my skin problems, but the skin prick test at least provides hard evidence that I have a demonstrable allergy to dust mites.

Lessons for Software Testing

After nearly two years of suffering, I’m grateful to have a probable root cause for my rash. Nevertheless, I can’t help but feel frustrated that it took so long to find a meaningful answer. As a software tester, I feel like I should have been able to figure it out much sooner. Reflecting on my journey reminds me of important lessons for software testing.

First and foremost, formal testing with wide coverage is better than random checking. When I first got my rash, I tried to address it based on intuition. Maybe I should stop eating mangoes? Maybe I should change my shower soap, or my laundry detergent? Maybe eating probiotics will help? These ideas, while not entirely bad, were based more on conjecture than evidence. Checking them took weeks at a time and yielded unclear results. Compare that to the skin prick test, which took half an hour in total and immediately yielded definite answers. So many software development teams do their testing more like tossing mangoes than like a skin prick test. They spot-check a few things on the new features they develop and ship them instead of thoroughly covering at-risk behaviors. Spot checks feel acceptable when everything is healthy, but they are not helpful when something systemic goes wrong. Hard evidence is better than wild guesses. Running an established set of tests, even if they seem small or basic, can deliver immense value in a short time.
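To make the contrast with spot checks concrete, here is a minimal Python sketch of a "panel" of small checks that all run in one pass, a bit like the dots on my forearms. Everything in it is hypothetical: `run_panel`, the check names, and the toy lambdas are illustrations rather than code from a real project, and a real team would more likely use a test framework than a hand-rolled runner.

```python
from typing import Callable, Dict


def run_panel(checks: Dict[str, Callable[[], bool]]) -> Dict[str, bool]:
    """Run every check in the panel and record pass/fail for each one.

    A check that raises an exception is recorded as a failure, so one
    broken check never hides the results of the others.
    """
    results: Dict[str, bool] = {}
    for name, check in checks.items():
        try:
            results[name] = bool(check())
        except Exception:
            results[name] = False
    return results


# Hypothetical panel: small, basic checks that run together every time.
panel = {
    "login accepts valid user": lambda: True,
    "cart total is non-negative": lambda: sum([5, 10]) >= 0,
    "discount never exceeds price": lambda: min(20, 15) <= 15,
    "empty search returns a list": lambda: isinstance([], list),
    # This one fails: the toy config has no "timeout" key (KeyError).
    "config has timeout": lambda: {"retries": 3}["timeout"] > 0,
}

report = run_panel(panel)
failures = [name for name, ok in report.items() if not ok]
print(failures)
```

Each check is tiny, but running the whole panel gives a result for every item, not only for the one thing somebody happened to suspect.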

When tests yield results, knowing what is “good” is just as important as knowing what is “bad.” Frequently, software engineers only look at failing tests. If a test passes, who cares? Failures are the things that need attention. Well, passing tests rule out potential root causes. One of the best results from my allergy test is that I’m not allergic to any of the “Big 8” food allergens: eggs, fish, milk, peanuts, shellfish, soy, tree nuts, and wheat. That’s a huge relief, because I like to eat good food. When we as software engineers get stuck trying to figure out why things are broken, it may be helpful to remember what isn’t broken.
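As a rough illustration of how passing results narrow a search, the sketch below crosses off every suspect that a passing check covers. The component names, the `covers` mapping, and the `narrow_suspects` helper are all made up for this example, and it assumes that a passing check really does clear the components it covers, which is not always true in practice.

```python
from typing import Dict, List, Set


def narrow_suspects(
    suspects: Set[str],
    covers: Dict[str, Set[str]],
    passed: Dict[str, bool],
) -> List[str]:
    """Return the suspects that no passing check has ruled out."""
    cleared: Set[str] = set()
    for check, ok in passed.items():
        if ok:
            # A passing check clears every component it exercises.
            cleared |= covers.get(check, set())
    return sorted(suspects - cleared)


# Hypothetical system: four components, three checks.
suspects = {"auth", "cart", "payments", "search"}
covers = {
    "test_login": {"auth"},
    "test_search_results": {"search"},
    "test_checkout": {"cart", "payments"},
}
passed = {
    "test_login": True,
    "test_search_results": True,
    "test_checkout": False,
}

print(narrow_suspects(suspects, covers, passed))
```

Here the two passing checks remove `auth` and `search` from the list, which leaves only `cart` and `payments` to investigate.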

Unfortunately, no testing has “complete” coverage, either. My skin prick test covered about 60 common allergens. Thankfully, it revealed my previously unknown allergy to dust mites, but I recognize that I might be allergic to other things not covered by the test. Even if I mitigate dust mites in my house, I might still have this rash. That worries me a bit. As a software tester, I worry about product behaviors I am unable to cover with tests. That’s why I try to maximize coverage on the riskiest areas with the resources I have. I also try to learn as much as I can about the behaviors under test to minimize unknowns.
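One simple way to think about "riskiest areas with the resources I have" is a greedy pick by risk per unit of effort. The sketch below is only one possible heuristic, not a recommended formula: the `Area` type, the risk scores, the hour estimates, and the budget are all invented for illustration.

```python
from dataclasses import dataclass
from typing import List


@dataclass(frozen=True)
class Area:
    name: str
    risk: int  # assumed score: higher means a failure is more likely or more costly
    cost: int  # assumed effort in hours to cover the area with tests


def pick_areas(areas: List[Area], budget: int) -> List[str]:
    """Greedily choose areas with the best risk-to-cost ratio that fit the budget."""
    chosen: List[str] = []
    remaining = budget
    for area in sorted(areas, key=lambda a: a.risk / a.cost, reverse=True):
        if area.cost <= remaining:
            chosen.append(area.name)
            remaining -= area.cost
    return chosen


# Hypothetical product areas and a six-hour testing budget.
areas = [
    Area("checkout", risk=9, cost=3),
    Area("search", risk=4, cost=2),
    Area("profile page", risk=2, cost=2),
    Area("login", risk=8, cost=2),
]

print(pick_areas(areas, budget=6))
```

With these made-up numbers, the budget covers `login` and `checkout` and leaves `search` and `profile page` untested, which is exactly the kind of known gap worth writing down.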

Testing is expensive but worthwhile. My skin prick allergy test cost almost $600 out of pocket. To me, that cost is outrageously high, but I grudgingly admit it was worthwhile to get a definitive answer. (I won’t digress into problems with American healthcare costs.) Many software teams shy away from regular, formal testing work because they don’t want to spend the time doing it or pay the dollars for someone else to do it. I would’ve gladly shelled out a grand a year ago if I could have known the root cause of my rash. My main regret is not visiting an allergist sooner.

Finally, test results are useless without corrective action. Now that I know I have a dust mite allergy, I need to mitigate dust mites in my house:

- I need to encase my mattress and pillow with hypoallergenic barriers that keep dust mites out.

- I need to wash all my bedding in hot water (at least 130 °F) or freeze it for over 24 hours.

- I need to deeply clean my bedroom suite to eliminate existing dust.

- I need to maintain a stricter cleaning schedule for my house.

- I need to upgrade my HVAC air filters.

- I need to run an air purifier in my bedroom to eliminate any other airborne allergens.

In the worst case, I can take allergy shots to abate my symptoms.

Simply knowing my allergies doesn’t fix them. The same goes for software testing – testing does not improve quality; it merely indicates problems with quality. We as engineers must improve software behaviors based on feedback from testing, whether that means fixing bugs, improving user experience, or shipping warnings for known issues.

Next Steps

Now that I know I have an allergy to dust mites, I will do everything I can to abate them. I already ordered covers and an air purifier from Amazon. I also installed new HVAC air filters that catch more allergens. For the past few nights, I slept in a different bed, and my skin has noticeably improved. Hopefully, this is the main root cause and I won’t need to do more testing!